Microservices have changed how applications are built, managed, and supported in the cloud. They are central to the cloud-native paradigm because they let teams scale individual parts of an application on demand, which suits a fiercely competitive market. The success of Monzo Bank and Uber is often cited as evidence that cloud-native and microservices architectures are the way forward. Both companies took a digital-first approach that keeps infrastructure maintenance costs low.

Take Monzo, for instance. It built its core banking system from the ground up on AWS cloud infrastructure, using a microservices architecture and container tools such as Docker and Kubernetes running across multiple virtualized servers. Its streamlined, user-friendly approach has attracted a significant proportion of millennials to its customer base. As the cloud-native approach continues to gain traction, it is worth examining the benefits and challenges that organizations encounter when adopting microservices, which lie at the heart of the cloud-native philosophy.

Capitalizing on the Cloud: Understanding Cloud-Native Architecture

How can organizations break free from mainframes, monolithic applications, and traditional on-premises datacenter architecture? The answer lies in adopting a cloud-native approach, which empowers modern businesses with unparalleled speed and agility.

Cloud-native applications are built from the ground up, specifically optimized for scalability and performance in cloud environments. They rely on microservices architecture, leverage managed services, and embrace continuous delivery to achieve enhanced reliability and faster time to market. This approach proves particularly advantageous for financial services, banks, and capital markets, where a robust and highly available infrastructure capable of handling high-traffic volumes is a necessity. The key components of a cloud-native approach are as follows:

- Cloud service model: This model treats the underlying infrastructure as disposable. Servers are not repaired, updated, or modified in place; instead, they are automatically destroyed and replaced with new instances provisioned within minutes. Automating replacement and scaling in this way keeps operations running with little manual intervention.

- Containers: Container technology forms another critical pillar of the cloud-native approach, facilitating the movement of applications and workloads across different cloud environments. Kubernetes, an open-source platform, takes center stage in managing containerized workloads, providing speed, manageability, and resource isolation.

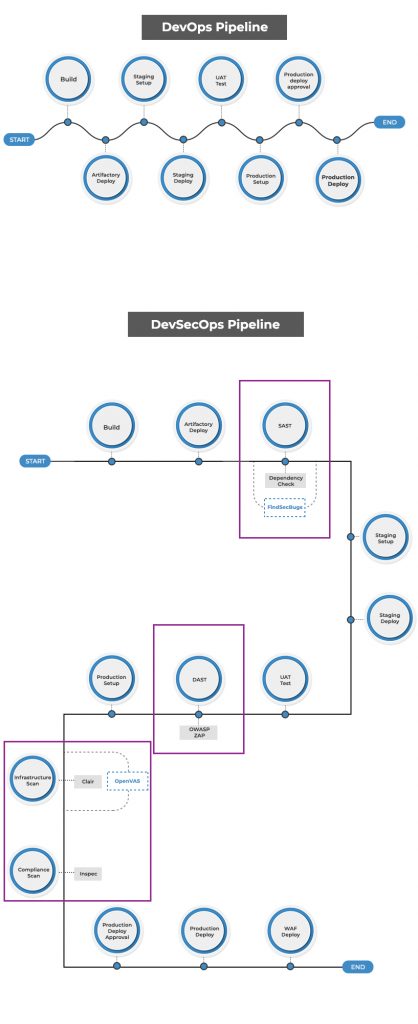

- DevOps: DevOps is a methodology widely adopted in the software development and IT industry. It encompasses cultural philosophies, practices, and tools that enhance an organization’s ability to deliver applications and services at high velocity, thus enabling accelerated innovation. This approach promotes collaboration and communication between development teams and IT operations, driving efficiency and agility.

- CI/CD pipelines: Continuous Integration/Continuous Delivery (CI/CD) pipelines focus on automating and streamlining software delivery throughout the entire software development lifecycle. By integrating frequent code changes, running automated tests, and deploying applications in a consistent and automated manner, organizations can improve productivity, increase speed to market, and ensure higher quality software releases.

Lastly, microservices play a pivotal role within the cloud-native architecture. To borrow an analogy from the Marvel character Vision, who remains intact despite repeated setbacks on his journey to becoming a superhero, an application built from microservices can keep running when individual parts fail, because its parts are independent. Microservices function as modular and cohesive components within a larger application structure, enabling flexibility, scalability, and resilience.
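
The disposable-infrastructure idea from the first component above can be illustrated with a small sketch. It is not tied to any cloud provider: `Instance`, `provision`, and `reconcile` are hypothetical names, and `provision` stands in for whatever API call actually launches a server. The point it shows is that unhealthy instances are discarded and replaced rather than repaired.

```python
from dataclasses import dataclass
from itertools import count

_ids = count(1)  # hypothetical source of unique instance IDs


@dataclass(frozen=True)
class Instance:
    instance_id: int
    healthy: bool = True


def provision() -> Instance:
    """Stand-in for a cloud API call that launches a fresh instance."""
    return Instance(instance_id=next(_ids))


def reconcile(fleet: list[Instance], desired: int) -> list[Instance]:
    """Return a fleet of `desired` healthy instances.

    Nothing is repaired in place: unhealthy instances are dropped,
    and new ones are provisioned to make up the difference.
    """
    survivors = [inst for inst in fleet if inst.healthy]
    survivors = survivors[:desired]       # scale in if there are too many
    while len(survivors) < desired:       # scale out or replace
        survivors.append(provision())
    return survivors
```

Calling `reconcile(fleet, 3)` on a fleet with one unhealthy instance returns three healthy instances, with the unhealthy one replaced by a newly provisioned one.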

By embracing cloud-native architecture, organizations can unlock the full potential of the cloud, capitalizing on its scalability, reliability, and performance. It offers a transformative approach to application development and deployment, revolutionizing the way businesses operate in the digital era.

The Game-Changing Benefits of Microservices Architecture

Microservices offer numerous advantages. Unlike monolithic applications, where all functions are tightly integrated into a single codebase, microservices separate services from one another, so each service is smaller and simpler to understand, change, and test.

With application development and testing costs continuing to rise, microservices are an excellent choice for fintech and financial services for the following reasons:

- Quick scalability: Microservices enable easy addition, removal, updating, or scaling of individual services, ensuring flexibility and responsiveness to changing demands.

- Disruption-proof: In a monolith, a failure in one component can disrupt the entire system. Microservices function independently, so a failure in one service can be contained rather than taking down the whole application, which enhances reliability and fault tolerance.

- Language agnostic: Microservices architectures are language agnostic, allowing organizations to use different programming languages and technologies for individual services. This flexibility facilitates the use of the most suitable tools and frameworks for each specific service, optimizing development and maintenance processes.

- Easier deployment: Microservices enable the independent deployment of individual services without affecting others in the architecture. This decoupling of services simplifies the deployment process and reduces the risk of unintended consequences.

- Replication across areas: The microservices model is easily replicable across different areas or domains. By following the established patterns and principles of microservices, organizations can expand their architecture and leverage the benefits of modularity and scalability in various contexts.

- Minimal ripple effect and faster time to market: In monolithic architectures, introducing new features or implementing customer requests can be a lengthy and complex process. With microservices, new features can be developed and deployed independently, reducing the risk of a ripple effect and enabling faster time to market. Customers can experience desired features within weeks rather than waiting for months or years.
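
As a minimal sketch of the failure isolation described in the list above, the example below assembles one response from two independent services. The service functions and the returned values are hypothetical and the "network calls" are simulated; the aim is only to show that when an optional service fails, the caller can still serve the core data.

```python
def fetch_balance(user_id: str) -> float:
    """Hypothetical call to a healthy account service."""
    return 125.50


def fetch_recommendations(user_id: str) -> list[str]:
    """Hypothetical call to a recommendations service that is currently down."""
    raise ConnectionError("recommendations service unavailable")


def build_dashboard(user_id: str) -> dict:
    """Assemble a response from two independent services.

    A failure in the optional service is contained here, so the
    core data from the account service is still returned.
    """
    dashboard = {"balance": fetch_balance(user_id)}
    try:
        dashboard["recommendations"] = fetch_recommendations(user_id)
    except ConnectionError:
        dashboard["recommendations"] = []  # degrade gracefully
    return dashboard
```

In a monolith the equivalent failure would typically occur inside the same process; here the caller decides explicitly how to degrade when one dependency is unavailable.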

By leveraging microservices, fintech and financial service organizations can enjoy increased agility, scalability, fault tolerance, and accelerated innovation while optimizing development and operational costs.

Why are microservices difficult to implement?

With great power comes great responsibility. While microservices are a significant step forward in application development, they bring challenges that make them tough to handle. In particular, because microservices have multiple dependencies, testing microservices-based applications is not an easy task.

Here are some of the challenges that teams face when implementing microservices:

- Collaboration across multiple teams: The existence of multiple teams working on different microservices can lead to coordination challenges. Ensuring effective communication and collaboration between teams becomes crucial to maintain alignment and avoid conflicts.

- Scheduling end-to-end testing: Conducting comprehensive end-to-end testing becomes challenging due to the distributed nature of microservices. Coordinating a common time window for testing all interconnected services can be difficult, especially when teams are working across different time zones or have varying release cycles.

- Isolation and distributed nature: Microservices operate independently, which brings benefits but also challenges. Working in isolation can make it harder to ensure seamless integration and coordination between different microservices, potentially leading to compatibility issues or inconsistencies in functionality.

- Data management complexities: Each microservice typically has its own data store, leading to data management challenges. Ensuring data consistency, integrity, and synchronization across multiple microservices becomes critical to maintain a holistic view of the system.

- Risk of failure: With the increased number of services and their interdependencies, the risk of failure also amplifies. A failure in one microservice can potentially affect other dependent services, leading to cascading failures and system-wide disruptions.

- Bug fixing and debugging: Identifying and fixing bugs in a microservices architecture can be more complex than in monolithic systems. Since microservices work in isolation, debugging and troubleshooting require careful analysis and coordination among different teams responsible for individual services.
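
To make the risk of cascading failure in the list above concrete, the sketch below walks a dependency map to find every service that could be affected when one service fails. The service names and the `DEPENDS_ON` map are invented for illustration; a real system would derive this information from tracing or configuration data.

```python
from collections import deque

# Hypothetical dependency map: each service lists the services it calls.
DEPENDS_ON = {
    "web": ["payments", "accounts"],
    "payments": ["ledger", "fraud"],
    "accounts": ["ledger"],
    "fraud": [],
    "ledger": [],
    "notifications": [],
}


def affected_by(failed: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every service that directly or transitively depends on `failed`."""
    # Invert the map: service -> services that call it.
    callers: dict[str, list[str]] = {name: [] for name in depends_on}
    for service, deps in depends_on.items():
        for dep in deps:
            callers.setdefault(dep, []).append(service)

    # Breadth-first walk from the failed service up through its callers.
    affected: set[str] = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for caller in callers.get(current, []):
            if caller not in affected:
                affected.add(caller)
                queue.append(caller)
    return affected
```

With this map, a failure in `ledger` reaches `payments`, `accounts`, and `web`, while a failure in `notifications` affects no other service. The more shared dependencies an architecture has, the larger this set becomes.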

Resolving the Challenges

Microservices serve as the fundamental building blocks for modern digital products and ecosystems. Their architecture offers the flexibility to choose the language or technology for rapid and independent development, testing, and deployment.

To overcome these challenges, it is essential to address the following:

- Include specialists for every layer: Ensure the presence of experts in user interface (UI), business logic, and data layer to effectively handle the complexities of microservices architecture.

- Manage turnover effectively: Acknowledge the possibility of skilled resources leaving and establish a plan to ensure seamless transitions and quality replacements.

- Prioritize dedicated infrastructure: Ensure the availability of high-quality cloud computing and hosting infrastructure capable of handling the anticipated load and traffic, guaranteeing the optimal performance of the product.

- Implement a principled DevOps approach: Given the higher risks of security breaches in microservices, adopt a rigorous DevOps approach to enhance security measures. Secure APIs play a vital role in safeguarding data by allowing access only to authorized users, applications, and servers.

- Establish service alignment through APIs: Despite working independently, microservices are interconnected within the application structure. Therefore, it is crucial to ensure proper alignment and communication between services through well-designed APIs.

- Enable dynamic communication: Microservices should be able to reach one another not only at fixed, statically configured locations but also as service instances are added, moved, or removed at runtime. This requires load balancers, DNS, and smart servers and clients.
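
The dynamic communication point in the list above can be sketched with an in-memory registry and a "smart client" that looks up the current instances on every call and rotates across them. `ServiceRegistry` and `RoundRobinClient` are hypothetical classes written for this example, and the addresses are placeholders; production systems would typically rely on DNS, a load balancer, or a dedicated discovery service instead.

```python
class ServiceRegistry:
    """In-memory stand-in for a service registry (hypothetical)."""

    def __init__(self) -> None:
        self._instances: dict[str, list[str]] = {}

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, []).append(address)

    def deregister(self, service: str, address: str) -> None:
        self._instances[service].remove(address)

    def lookup(self, service: str) -> list[str]:
        # Return a copy so callers cannot mutate the registry's state.
        return list(self._instances.get(service, []))


class RoundRobinClient:
    """Client that re-resolves addresses on each call and rotates across them."""

    def __init__(self, registry: ServiceRegistry, service: str) -> None:
        self._registry = registry
        self._service = service
        self._counter = 0

    def next_address(self) -> str:
        addresses = self._registry.lookup(self._service)
        if not addresses:
            raise LookupError(f"no instances registered for {self._service}")
        address = addresses[self._counter % len(addresses)]
        self._counter += 1
        return address
```

Because the client resolves addresses at call time rather than at startup, an instance that is deregistered stops receiving traffic on the next call without any change to the client's configuration.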

Why Choose Magic FinServ for Cloud-Native and Microservices Excellence?

Capitalizing on the power of cloud-native and microservices architecture is crucial in today’s digital landscape. However, organizations face challenges such as re-platforming and re-factoring when implementing cloud-native applications, as highlighted by an IDC survey. To fully leverage the potential of the cloud, organizations need a partner that possesses a comprehensive understanding of cloud architecture, DevOps practices, and the architectural changes brought about by microservices to support the cloud-native model.

At Magic FinServ, we have a proven track record of successfully building and delivering digital products, web apps, and services to market using agile methodologies. Our solutions are built on a structured approach that optimizes value and ensures early wins.

By partnering with us, you can:

- Break the monolithic application into microservices.

- Shift from waterfall to agile with a minimum viable product at the core.

- Manage costs by focusing on early wins and generating incremental value.

- Ensure operational excellence through automation with CI/CD pipelines and infrastructure as code (IaC), and enhance productivity with DevOps and Agile methodologies.

- Incorporate horizontal scaling and design for performance efficiency.

- Ensure security is baked into the DevOps lifecycle.

If you would like to know more, you can write to us at mail@magicfinserv.com.